Cameron (Member, joined May 10, 2021)

I'm loosely following the advice in the SEO for XenForo thread here on the forum. Instead of blocking pages in robots.txt, though, I'm allowing them to be crawled. I've removed tons of links on my site, and I'm letting Google crawl every 301 redirect. I'm hopeful that Google will eventually canonicalize to the proper pages. So far it's not doing a very good job, but once the PageRank of the old redirecting URLs drops, I think the proper pages will become the primary ones.

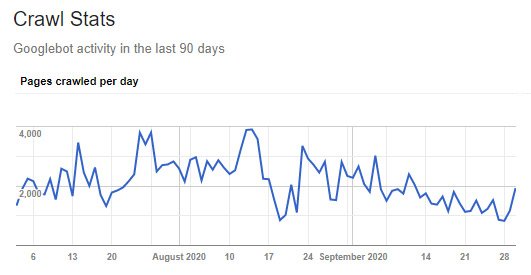

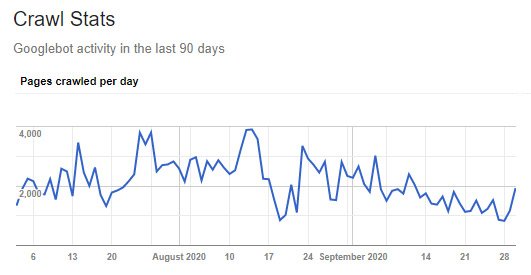

Also, I've removed the links to the member pages and the attachment pages. I've blocked both via permissions in the admin panel, so they return 403 Forbidden. I've also removed any other unnecessary links, as directed in the thread referenced above. I installed XenForo as a replacement for the classifieds software that previously ran on the same domain, so about 95% of those old URLs now return 410 Gone. That's intentional: I want all of those old URLs to disappear from Google's index. So what I'm now seeing in the site's log files is that nine out of ten URLs Googlebot crawls don't return a normal page. Whether it's a 301 redirect, a 403 Forbidden, a 410 Gone, or a 404 Not Found, none of them is a 200 OK, and according to Botify, they're not favorable to Googlebot. As a matter of fact, when Googlebot encounters these types of responses, it slows its crawling of a site, either because it thinks it's causing the errors by overloading the server or because it concludes the site isn't worth crawling with so many dead pages. And in case you're curious, I have some evidence that Google doesn't like these types of URLs. Check this out. These are my crawl stats for the past few months.

You can see the downward trend. I'm actually surprised by that last small increase, which is encouraging.
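If you want to check a figure like "nine out of ten crawled URLs are errors" against your own server logs, here's a minimal Python sketch. It assumes your logs use the Apache/nginx combined log format, and it identifies Googlebot by user-agent string only, which anyone can spoof (Google recommends confirming real Googlebot traffic with a reverse DNS lookup). The function names are hypothetical, not from any existing tool.

```python
import re
from collections import Counter

# Combined Log Format (assumed), e.g.:
# 66.249.66.1 - - [10/May/2021:13:55:36 +0000] "GET /threads/a.1/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Tally HTTP status codes for requests whose user agent mentions Googlebot.

    Lines that don't parse as combined log format are skipped.
    """
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[int(match.group("status"))] += 1
    return counts


def non_200_share(counts):
    """Fraction of Googlebot hits that did not return 200 OK (0.0 if there are none)."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return (total - counts.get(200, 0)) / total
```

For example, open your access log, pass the file handle to `googlebot_status_counts`, and call `non_200_share` on the result; a value near 0.9 would match what I'm seeing. `counts.most_common()` shows which codes (301, 403, 404, 410) dominate.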

I really just wanted to write this post to give others a heads-up about why their Googlebot crawl rate might be lower than they're used to. If you're running a CMS or forum with lots of built-in 403 Forbidden pages or 301 redirects, Google isn't going to like that. It doesn't enjoy crawling those types of pages, and it also doesn't enjoy crawling pages that are no longer live and return 404 or 410 response codes. In my case, there's really nothing I can do besides let Google crawl all of these pages and wait for them to fall out of the index naturally. Eventually they'll all be gone from the index, and Google will only revisit those dead URLs every few years, at an extremely slow rate. That's what I'm hoping for.
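To see whether the crawl rate actually recovers as dead URLs drop out of the index, you could count Googlebot hits per day from the same logs and fit a simple least-squares slope. This is a rough sketch with hypothetical function names: it matches "Googlebot" anywhere in the line and assumes the combined-log timestamp format. A negative slope means fewer Googlebot hits per day over the period.

```python
from collections import Counter
from datetime import datetime, timedelta


def daily_googlebot_hits(lines):
    """Count requests per calendar day for log lines that mention Googlebot.

    Crude filter: 'Googlebot' may appear anywhere in the line, not only the
    user agent. Timestamps are assumed to look like [10/May/2021:13:55:36 +0000].
    """
    per_day = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        start = line.find("[")
        end = line.find("]", start + 1)
        if start == -1 or end == -1:
            continue
        try:
            when = datetime.strptime(line[start + 1:end], "%d/%b/%Y:%H:%M:%S %z")
        except ValueError:
            continue
        per_day[when.date()] += 1
    return per_day


def trend_per_day(per_day):
    """Least-squares slope of daily hits over time (change in hits per day).

    Days with no hits between the first and last observed day count as zero.
    """
    if not per_day:
        return 0.0
    first, last = min(per_day), max(per_day)
    span = (last - first).days
    if span < 1:
        return 0.0
    xs = list(range(span + 1))
    ys = [per_day[first + timedelta(days=i)] for i in xs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var
```

Filling missing days with zero matters here: a day when Googlebot didn't show up at all is exactly the kind of drop you want the slope to reflect.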

To read up on compliant and non-compliant URLs as they relate to SEO, please take a look at the two pages I've linked below. They were written by an author at Botify, and their content makes a lot of sense. I'm eager to see the new crawl rate once all of my junk pages are gone, but that's going to take a while. A very painful while. So frustrating.

https://www.botify.com/blog/seo-compliant-urls

https://www.botify.com/blog/crawl-budget-optimization-for-classified-websites
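As I read those Botify articles, a URL counts as "compliant" roughly when it returns HTTP 200, serves HTML, isn't marked noindex, and has no canonical tag pointing somewhere else. The sketch below encodes that reading as a checklist; the `CrawledUrl` record and the exact rules are assumptions for illustration, not Botify's actual implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CrawledUrl:
    """Hypothetical record of what a crawler saw for one URL."""
    url: str
    status: int
    content_type: str = "text/html"
    noindex: bool = False
    canonical: Optional[str] = None


def non_compliance_reasons(page: CrawledUrl) -> List[str]:
    """Return why a URL is non-compliant; an empty list means compliant.

    Assumed rules (a sketch of Botify-style compliance, not Botify's code):
    200 status, HTML content, no noindex, canonical absent or self-referencing.
    """
    reasons = []
    if page.status != 200:
        reasons.append(f"HTTP {page.status}")
    if not page.content_type.lower().startswith("text/html"):
        reasons.append("not HTML")
    if page.noindex:
        reasons.append("noindex")
    if page.canonical is not None and page.canonical != page.url:
        reasons.append("canonicalized elsewhere")
    return reasons
```

Under these rules, every 301, 403, 404, and 410 in the logs is non-compliant on status alone, which is why a site full of them looks so poor from a crawl-budget point of view.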

Let me know what you think about all this. Do you have a website with a low crawl rate? Was it higher before and then recently fell? If so, please let me know, as I may be able to explain what's going on. At this point in my life, I'm pretty good at this stuff.