JGaulard said:

Hi - It sounds like you're getting things figured out.

Gotta keep trying...maybe eventually strike gold!

I've got to trust that the person who did the migration did everything properly. He said he'd done hundreds of XenForo migrations (many of them vB to XF). Probably the biggest difference was my request to keep the old website up & running (and that's why it was put into a different server directory).

I'm thinking that at the time nothing was said (it got overlooked) about using robots.txt to block the server directory the former vB site was moved into, which would prevent Google from continuing to crawl it. Normally the old site would have been deleted from the server...and this sort of wrinkle would never have been a concern.

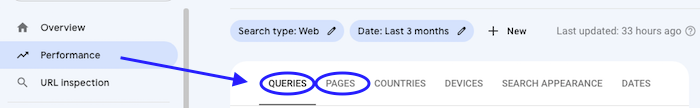

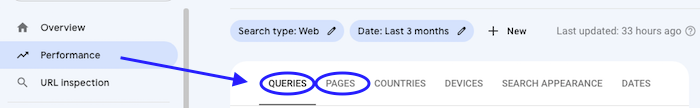

JGaulard said:

I think the confusion on my part was stemming from the terminology we have been using. When you say "old site," it can mean "old site old directory" or "old site new directory."

* When I say "old site"...I'm talking about the original vBulletin site (intact)...but in a different sub-directory than it originally resided.

* When I say "new site"...I'm talking about the XF site that was created (with the imported vBulletin site data/content), which now resides in the root directory of the server.

JGaulard said:

So when you say the old site is now showing all 403 response codes, do you mean the "old site old directory" redirects are showing 403s as opposed to 301s? Or do you mean the "old site new directory" (the one that's been moved and that's not supposed to be seen by anyone) is showing these 403s.

* The new XF site (with the imported data from the vB site)...resides in the root directory of the server...www.mysite.com

* The former website running vBulletin...now resides in the subdirectory...www.mysite.com/oldsite/.

This /oldsite/ directory ...is a different directory than the vB site used to reside in when the vB site was the ONLY site.

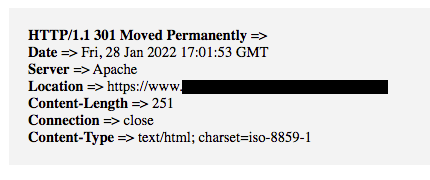

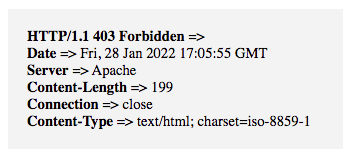

The former vB site (that resides in the www.mysite.com/oldsite/ directory)...it's these URLs that I placed in the Header Checker to check them...and got 403 errors for all the vB site URLs I tested.

To clarify further:

* New XF site resides in the root directory...www.mysite.com

* The directory the former vB site resides in now is...www.mysite.com/oldsite/

* The directory the former vB site USED TO reside in when it was the "live" and ONLY site was...www.mysite.com/forum/

As can be seen...the former vB site now resides in a different sub-directory than it used to (when it was the "live" and only site).
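For anyone who wants to repeat that header check without an online tool, here is a minimal Python sketch. It is only an illustration under some assumptions: the function names `fetch_status` and `describe` are made up for this example, the script deliberately does not follow redirects (so a 301 is reported as a 301 rather than as the status of the page it points to), and some servers answer `HEAD` requests differently than `GET`.

```python
import urllib.error
import urllib.request


class NoRedirect(urllib.request.HTTPRedirectHandler):
    """Stop urllib from following redirects so 3xx codes stay visible."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # returning None makes urllib raise HTTPError for the 3xx


def fetch_status(url, timeout=10):
    """Return the HTTP status code for a URL (hypothetical helper)."""
    opener = urllib.request.build_opener(NoRedirect)
    request = urllib.request.Request(url, method="HEAD")
    try:
        with opener.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 3xx (not followed), 4xx and 5xx responses all end up here
        return err.code


def describe(code):
    """Give a rough plain-English label for a status code."""
    if 200 <= code < 300:
        return "ok"
    if code in (301, 308):
        return "permanent redirect"
    if 300 <= code < 400:
        return "other redirect"
    if code == 403:
        return "forbidden"
    if 400 <= code < 500:
        return "other client error"
    return "server error or unexpected"
```

Calling `describe(fetch_status("https://www.mysite.com/oldsite/"))` would then be expected to print "forbidden" if the directory really is answering with 403s, and "permanent redirect" for an old /forum/ URL whose 301 is working.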

JGaulard said:

From what you've written above, I'm assuming it's the "old site new directory" that's the problem. If so, why not just get rid of it in its entirety? I think you said that you kept it in case you needed it for something, but since such a time has passed, can you now delete both the files and the database? That would solve all of this.

Yes I kept the former vB website up & running just in case I needed to refer to it if I ran into some issues with the new XF site. Like I mentioned earlier...call me a "pack rat"...I hate to get rid of it. "Murphy's Law" would more than likely kick in 1 week after I deleted the old website.

I figure if I can now block Google from crawling the directory the former vB website resides in...that will be good enough to stop any Google crawling of it that may be happening now & in the future. Then when I'm finally comfortable enough to delete the former vB site's directory...I'll delete it.

JGaulard said:

As long as those 301 redirects are working properly in your .htaccess files.

This is probably getting beyond my level of expertise. I find the .htaccess file a place I shouldn't mess with. If something in the .htaccess file is changed...and it's done incorrectly...serious things can be messed up!

I did review the current .htaccess file...and the person that set things up placed a lot of comments in there (I believe comments start with the...#...character).

I'm also not 100% sure what a 301 redirect in an .htaccess file should look like. But the only line in my .htaccess file that does not start with the # comment character...and does have...301...in it...is this one line:

RewriteRule ^(.*)$ https://%{HTTP_HOST}%{REQUEST_URI} [L,R=301]
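Reading that line on its own, it sends every request it applies to back to the same host and the same path, only with https:// in front. In other words, it looks like a "force HTTPS" redirect rather than a rule that maps old vB URLs to new XF URLs. The Python sketch below just imitates the redirect the rule would produce. It assumes the line is preceded by a RewriteCond (not shown here) that limits it to non-HTTPS requests, and the function name `https_redirect` is made up for the illustration.

```python
def https_redirect(host, path, query=""):
    """Imitate: RewriteRule ^(.*)$ https://%{HTTP_HOST}%{REQUEST_URI} [L,R=301]

    host  stands in for %{HTTP_HOST}
    path  stands in for %{REQUEST_URI} (the path part only)
    query is the original query string, which mod_rewrite carries over
          by default when the substitution has none of its own
    """
    location = f"https://{host}{path}"
    if query:
        location += f"?{query}"
    return 301, location
```

For example, `https_redirect("www.mysite.com", "/forum/showthread.php", "t=1")` gives a 301 pointing at `https://www.mysite.com/forum/showthread.php?t=1`: the scheme changes, the path does not.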

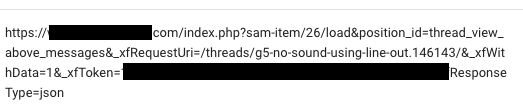

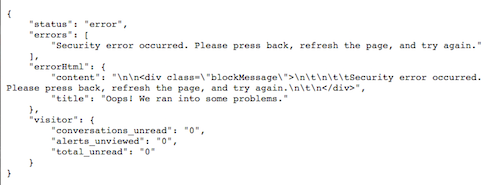

If you were expecting more...or something different...maybe this is where the special Xenforo add-on I mentioned above takes over:

JGaulard said:

Also, you don't need to add the asterisk if you want to block the directory I mentioned above. You can simply add it to the list of blocks that you've already put in there.

I think I'll add what you mentioned above:

User-agent: *

Disallow: /new-directory/

I think I understand it better...and it matches what I found searching the internet earlier today.

Do I need to add these 2 lines anywhere special in the robots.txt...so it functions properly?
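One way to sanity-check the placement before uploading anything is to run the robots.txt text through Python's built-in `urllib.robotparser`. This is only a sketch: the `Disallow: /admin.php` line is a hypothetical stand-in for whatever blocks are already in the file, `/oldsite/` is used as the directory to block, and `is_allowed` is a helper name made up for the example. Python's parser is also not Google's parser, so treat the result as a rough check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The new Disallow line sits inside the existing "User-agent: *" group,
# next to the blocks that are already there (the first one is hypothetical).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin.php
Disallow: /oldsite/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given crawler may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Old vB site in /oldsite/ should be blocked; the XF site in the root should not.
old_ok = is_allowed(ROBOTS_TXT, "Googlebot", "https://www.mysite.com/oldsite/showthread.php?t=1")
new_ok = is_allowed(ROBOTS_TXT, "Googlebot", "https://www.mysite.com/threads/example.1/")
print("old site crawlable:", old_ok)
print("new site crawlable:", new_ok)
```

The point of the layout is that a `Disallow` line only takes effect as part of a `User-agent` group, so if a `User-agent: *` group already exists, the new line can simply join the list under it.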

JGaulard said:

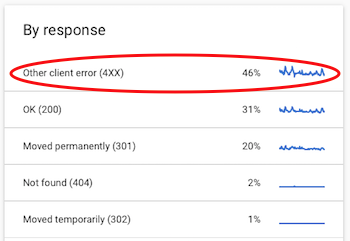

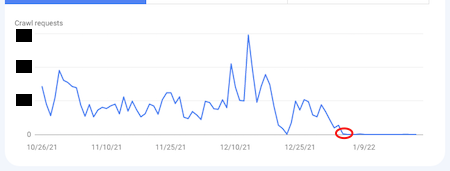

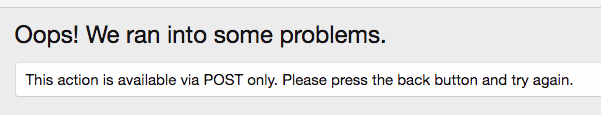

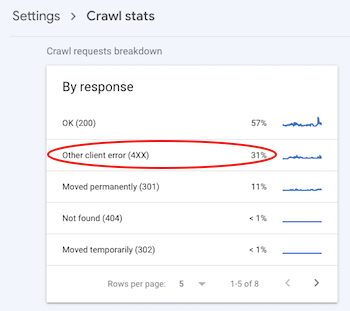

I'm wondering how much Google has been crawling this sister site of yours. If it's been hitting it hard and all it's been getting are 403 pages, yes, from what I've seen, that will definitely affect your crawl rate and the quality of your site in Google's eyes (in my humble opinion). Let's just say it can't be good, so I'm happy we're getting to the bottom of this issue.

I've been wondering this as well. Any forum content (before the Summer 2019 migration)...I'm thinking would be seen by the Google crawler as "duplicate content". All forum threads pre-Summer 2019 (vB site and XF site)...would be EXACTLY the same. I'm thinking Google crawler would not be liking this!

Plus since the former vB site would be seeing much less new content (as compared to the XF site)...I think if a site is not generating much new content...Google crawler crawls it less.

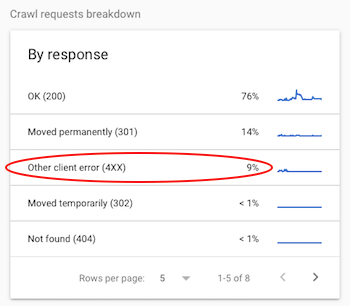

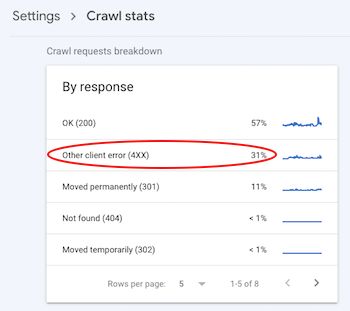

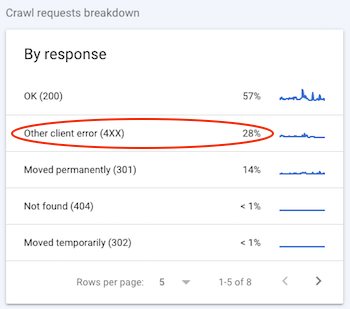

But then again (not 100% sure about this)...Google crawler may see both sites (vB & XF) as 2 parts of a single site. And maybe all of the Google crawl data for everything goes into "one bucket"...and this is why the "By Response: Other Client Error (4xx)" statistic in Google Search Console is so messed up (all the 403 errors from the vB site are messing things up for the XF site).

Also...if the former vB site is "gobbling up" some (or a bunch) of the website crawl budget...this could explain why some/many of the good-content threads on the XF site are not getting crawled & indexed properly (showing up as "Valid URLs" in Google Search Console).

And maybe it would explain why in Google Analytics the #2 most visited forum page on the site is from 2010 (this doesn't make sense). Content from 2010 would be totally irrelevant in 2022...and I doubt many folks in 2022 are "Googling" for information from 2010 (enough to make it the #2 most visited page for the site)! Lol

Thanks!