Alfuzzy said:

From what you've seen with your sites...what should the process/progression be for these robots.txt blocked pages over time?

Will Google crawl these pages again (plus more the next crawl)...or does Google say "Ok these 800+ pages are blocked by robots...I'll skip these...and start where I left off".

Then maybe next update in Google search console the 800 blocked pages may grow to 1200 (for example)?

Okay, just for some background, I've run classifieds websites since 2004. Classifieds are notorious for having oodles and oodles of junk pages as well as lots of options for users to take advantage of. They're sort of like forums. Tons of great stuff (links and pages) for web surfers, but a real mess for search engines to try to figure out. It's this experience I use to guide me today. I have done things that have caused my rankings to utterly collapse and then to scream back better than they ever were. I've dealt with this sort of thing way too much. Just so you know where I'm coming from.

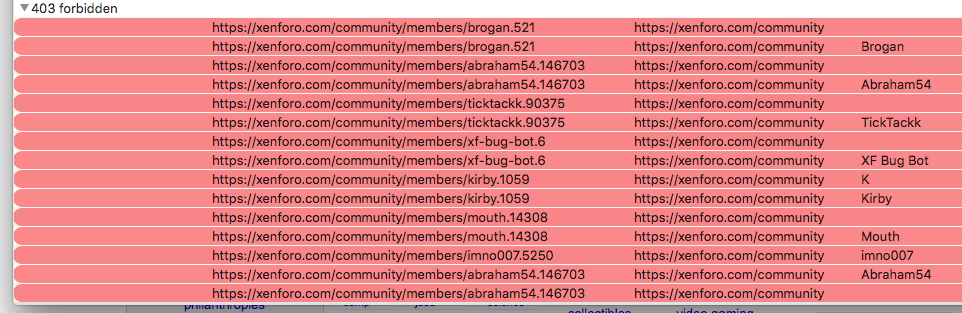

I can tell you with certainty that I have never had luck allowing pages that are thin (respond to seller, print friendly, member pages, etc...) or with noindex on them to be crawled. Some people say, "Yeah, put the noindex attribute on it and it should be okay." If you're dealing with duplicate content and that's what you're suffering from, that's one thing. In our cases, that's not the problem. We're dealing with not only crawl budget issues, we're also dealing with

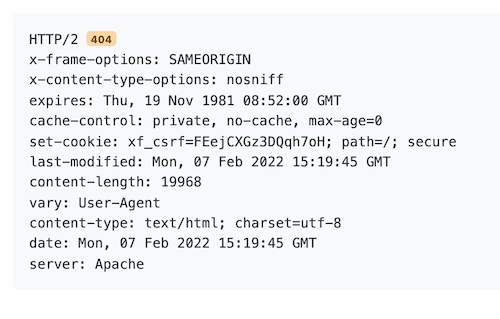

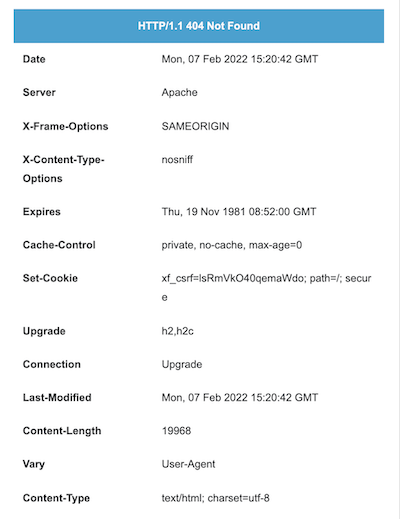

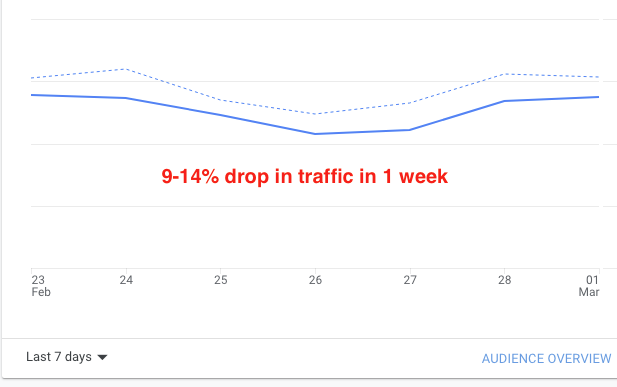

crawl demand issues. Google seems to have plenty of budget for our websites. The problem is, when it does crawl many of XenForo's pages, it says, "Yuck, I'll keep crawling slowly, but I'm not going to include much in my actual index." That's what those two graphs in the Search Console are telling us. The

Crawled but Not Indexed and

Discovered but Not Indexed. Yes, Googlebot has the willingness to crawl all those pages (albeit very lazily), but it's simply not indexing them due to other reasons. Those reasons seem to include low quality pages, such as ones that return 403 and perhaps 301 responses. I'm sure of the 403 and I'm still testing the 301.

To answer your first question, there are a few different factors at play. If you're running the default template, you've likely got a link at the top that says What's New. The pages in that directory carry the noindex tag, so you won't see them in any search results. On some of my sites, Googlebot never showed any interest in crawling them. On another, it crawled over 200,000 of them. 200,000, you ask? Yes. The pages within the /whats-new/ directory replicate based on user sessions (or simple clicks). If you go in there and click the New Posts link, then click out somewhere else, then go back in and click that New Posts link again, you'll see the URL change. There's a new number in it. Now, pretend you're a search engine clicking and clicking and clicking. Googlebot goes in once and sees one URL, then goes back and sees another, thinking it's unique. This goes on forever. It'll crawl those URLs all day long. It's a wonder it has time to crawl anything else. This directory is absolutely the most important one to block.
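A minimal sketch of that block, assuming XenForo is installed at the site root (on a site like mine it sits under /forum/, so the path would change accordingly), is a single Disallow line. The Python below uses the standard library's urllib.robotparser to check that the rule catches the session-numbered What's New URLs while leaving ordinary thread pages alone. The example.com host and the specific numbered URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: assumes XenForo lives at the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /whats-new/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Every regenerated What's New URL shares the /whats-new/ prefix, so one rule covers them all.
for url in (
    "https://example.com/whats-new/posts/12345/",  # hypothetical session-numbered URL
    "https://example.com/whats-new/posts/12346/",  # same listing, new number
    "https://example.com/threads/91/",             # ordinary thread page, still crawlable
):
    print(url, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")
```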

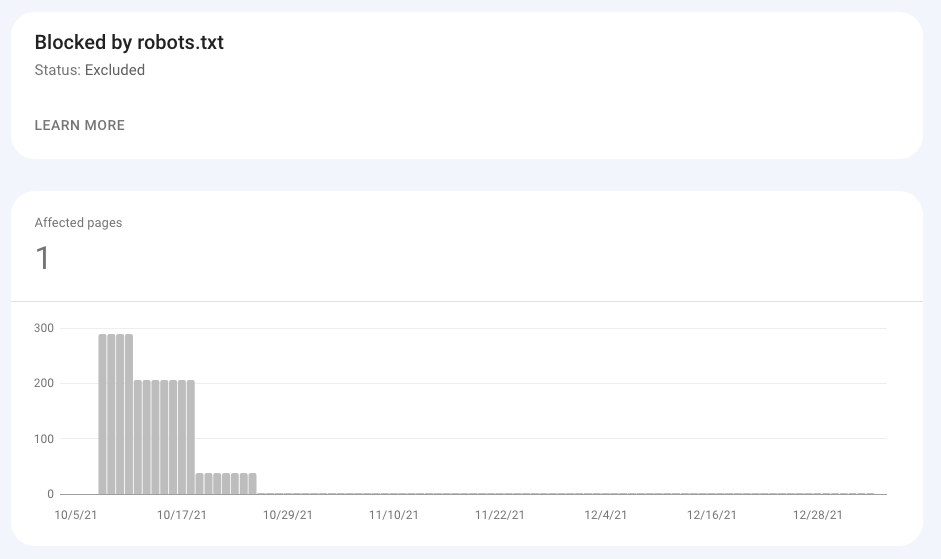

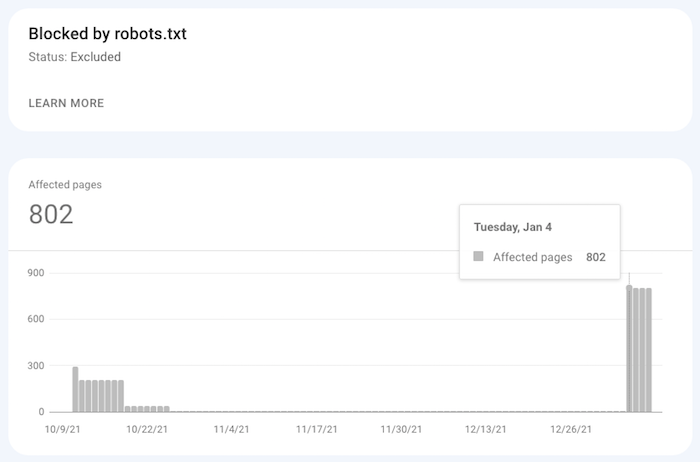

There is one link pointing to this directory, and all of those crawled pages sit behind it. Google knows how many pages it crawled. In my case, it was around 200,000. That's a shame, because in actuality there were only a handful of unique pages. Anyway, once that directory is blocked, Googlebot will stop crawling it, and the URLs it did crawl will start appearing in your Blocked by robots.txt graph. Over time (let's pretend that's the only directory you blocked), that graph will grow as Google reaches each already-crawled URL on its scheduler. When a URL comes up on the scheduler and it's blocked in the robots.txt file, it ends up on the graph and won't need to be crawled again (or appear on the scheduler again). It'll just sit there in stasis. After about 3 months, it'll fall out of the graph. I've seen this time and time again, and it does seem to be around 90 days. So, for example, if every single crawled page in that directory appeared on your graph tomorrow, the graph would jump to 200,000 pages, not grow any further, and then, after three months, fall back to zero. The pages would be gone. History, as if they were deleted. The thing is, the only reason those URLs would fall out of the graph is that they're not linked to anymore. The only one that's linked to is the What's New link in the header of the site. So the moral of this story is, when pages aren't linked to (when Googlebot can't reach them through a link), they'll eventually fall out of the graph.

Other links, such as the member pages and the attachment pages (if you're using them), are still linked to, so they may stay in the graph forever. Googlebot will see those links and always want to crawl them, so they'll hang around. That said, from what I've been seeing in recent years, they do seem to be disappearing like the others. So yes, you'll see that graph rise as far as it can go, meaning it will show as many blocked pages as you're telling Google to block (there's a ceiling, since you have a finite number of pages), and then the graph should plateau and begin to decrease. That is, unless you've got an insanely busy forum that creates large numbers of new pages to block every day.
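Here's a toy simulation of that rise, plateau, and decline. It is not how Google works internally; it simply encodes the observations above as hypothetical parameters: a fixed number of already-known URLs reached on the scheduler each day, orphaned URLs (like the /whats-new/ ones) dropping out about 90 days after they're first reported, and still-linked URLs (like member pages) staying put.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BlockedUrl:
    still_linked: bool                 # True for pages still linked sitewide (e.g. member pages)
    reported_on: Optional[int] = None  # day the URL first showed up as blocked


def simulate(orphaned: int, linked: int, revisits_per_day: int,
             expiry_days: int = 90, days: int = 200) -> List[int]:
    """Return the size of a hypothetical 'Blocked by robots.txt' graph for each day."""
    # Orphaned URLs are queued first, purely as a simplifying assumption.
    urls = [BlockedUrl(False) for _ in range(orphaned)] + [BlockedUrl(True) for _ in range(linked)]
    pending = list(urls)  # URLs not yet reached on the crawl scheduler
    sizes = []
    for day in range(days):
        # The scheduler reaches a fixed number of already-known URLs per day.
        for url in pending[:revisits_per_day]:
            url.reported_on = day
        pending = pending[revisits_per_day:]

        # Count reported URLs that haven't aged out; only orphaned ones expire.
        size = sum(
            1 for url in urls
            if url.reported_on is not None
            and (url.still_linked or day - url.reported_on < expiry_days)
        )
        sizes.append(size)
    return sizes


sizes = simulate(orphaned=800, linked=200, revisits_per_day=50)
print(sizes[0], max(sizes), sizes[-1])  # 50 1000 200
```

With 800 orphaned and 200 still-linked URLs, the hypothetical graph climbs to 1,000, holds there, and then settles at the 200 pages that are still linked.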

Also, yes, it'll say, "I've already seen that these pages are blocked, so I'll move on to other ones." The process seems to accelerate and then decelerate, depending on the day. On some of my sites, the blocked count grows by 10% on some days and by 100% on others. I guess it depends on where the URLs are on the scheduler. Also, as time goes on, my crawl rate is increasing, so I think the scheduler is picking up steam.

Alfuzzy said:

When you say "run out of blocked pages"...does this mean when Google has finally crawled the whole site...has found each & every page that's supposed to be blocked by robots.txt...and this is theoretically the end for total robots.txt blocked pages (we'll see the highest number of blocked pages on the graph)?

Yes.

Alfuzzy said:

If this is true...then what happens:

1. Does this peak value for blocked pages stay there forever (unless something gets modified)?

2. Or does the blocked pages number start to decrease as Google says..."Ok I've crawled or notated these blocked pages enough now...and will no longer track them"...and the blocked pages number starts to decrease?

I talked about this above. Pages contained within a sealed directory like /whats-new/ will eventually disappear in their entirety, while other accessible URLs may hang around a lot longer. The very first PageRank algorithm stated that blocked pages won't be counted in the website's overall PageRank score, though, so that's good. Who knows if that still holds today.

Alfuzzy said:

I've always heard 4xx errors are more important to fix. If 403 errors are mostly associated with (from internet search via this link):

In your website's permissions, you've most likely got it set so guests (folks who aren't logged in, such as search engines) can't see member pages and attachment pages. When someone clicks on a member avatar or link and tries to see their profile, they're sent to a "You Must Log In to Do This" page instead of the member's profile page. The status code of that login page is a 403 rather than a 200. All that means is that the user needs authentication to see the content of the page. Also, when a user who isn't logged in tries to click on an image, they'll go to the same login page. More 403s. In Google's eyes, 403, 401, 410, and 404 are all the same thing: dead pages that can't be indexed. These are the ones that reduce crawl demand. If you ran a previous forum that didn't use these 403 pages, your crawl rate may have been 10,000 pages per day. Now that 50% of your crawled pages show 403 status codes, your crawl rate is probably about a tenth of that. Error status codes kill crawl demand. Not crawl budget: your budget (the number of pages Google could crawl) is big, but the demand is small. You're essentially linking to pages that aren't there, and Googlebot doesn't like it at all.
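One way to see how much of your crawl is going to dead ends is to tally status codes from your server's access log, filtered to Googlebot. The sketch below assumes you've already reduced the log to (url, status) pairs; the sample entries are hypothetical, and lumping 401, 403, 404, and 410 into one "dead" bucket follows the grouping described above.

```python
from collections import Counter

# Status codes grouped together above as "dead pages" that can't be indexed.
DEAD_PAGE_CODES = {401, 403, 404, 410}


def crawl_breakdown(entries):
    """Summarize (url, status) pairs from a Googlebot-filtered access log.

    Returns a Counter keyed by 'ok', 'redirect', 'dead', or 'other',
    plus the fraction of fetches that landed on dead pages.
    """
    buckets = Counter()
    for _url, status in entries:
        if 200 <= status < 300:
            buckets["ok"] += 1
        elif 300 <= status < 400:
            buckets["redirect"] += 1
        elif status in DEAD_PAGE_CODES:
            buckets["dead"] += 1
        else:
            buckets["other"] += 1
    total = sum(buckets.values())
    dead_share = buckets["dead"] / total if total else 0.0
    return buckets, dead_share


# Hypothetical sample: guests hitting member and attachment pages get the 403 login page.
sample = [
    ("/threads/91/", 200),
    ("/members/alfuzzy.12/", 403),
    ("/attachments/photo-jpg.345/", 403),
    ("/threads/91/latest", 301),
]
buckets, dead_share = crawl_breakdown(sample)
print(dict(buckets), f"{dead_share:.0%}")  # {'ok': 1, 'dead': 2, 'redirect': 1} 50%
```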

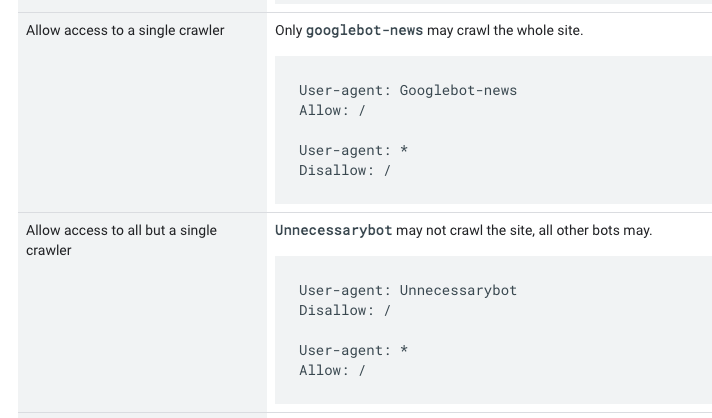

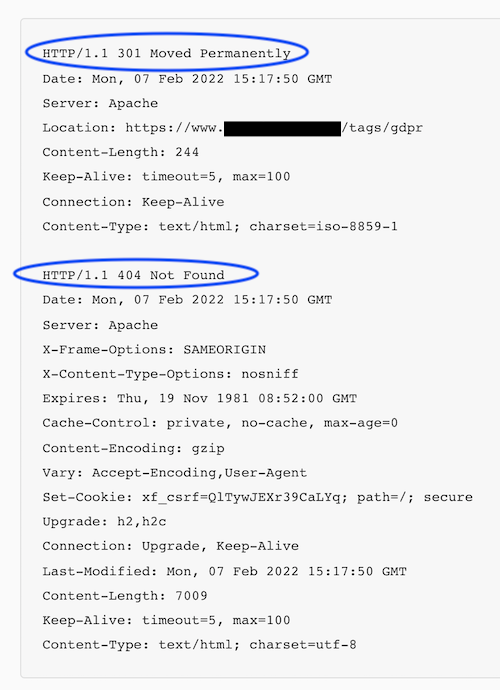

Now, 301 response codes mean that the page has permanently moved. Google treats these just fine. It'll see a link to a page that's been moved, follow the 301 redirect, and then visit the new URL. It's perfectly normal. The old URL is like a link to the new one. The problem is, XenForo forums use tons of these redirects all throughout their websites for various reasons.

Take a look at these four links that are on my website here:

https://gaulard.com/forum/threads/91/

https://gaulard.com/forum/threads/91/latest

https://gaulard.com/forum/threads/91/post-330

https://gaulard.com/forum/goto/post?id=329

Go ahead and click on them and you'll see they go to the same page. The URL may have a fragment (the # part) in it, but Google ignores those. Individually, Google doesn't have a problem with these 301 redirects. The problem we're having is that there are so many of them that Googlebot is crawling them instead of our good canonical URLs. Basically, we're burning through our allotted crawl budget because of these redirects.
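To illustrate where those fetches go, the sketch below follows a hypothetical redirect map in which several XenForo-style URLs all 301 to one thread page. It strips the fragment with urllib.parse.urldefrag (fragments never reach the server, which is why Google ignores them) and counts how many redirect fetches were spent on each page actually reached. The map and its example.com URLs are made up; on a real site the redirects come from the software itself.

```python
from urllib.parse import urldefrag

# Hypothetical 301 map: source URL -> Location header value.
REDIRECTS = {
    "https://example.com/threads/91/": "https://example.com/threads/sample-thread.91/",
    "https://example.com/threads/91/latest": "https://example.com/threads/sample-thread.91/#post-330",
    "https://example.com/threads/91/post-330": "https://example.com/threads/sample-thread.91/#post-330",
    "https://example.com/goto/post?id=329": "https://example.com/threads/sample-thread.91/#post-329",
}


def resolve(url, redirects, max_hops=10):
    """Follow 301s until a non-redirecting URL is reached; return (final_url, hops)."""
    hops = 0
    url, _fragment = urldefrag(url)
    while url in redirects:
        if hops >= max_hops:
            raise RuntimeError(f"redirect loop or chain too long at {url}")
        url, _fragment = urldefrag(redirects[url])  # drop the #fragment before the next fetch
        hops += 1
    return url, hops


def fetch_cost(start_urls, redirects):
    """Map each final page to the number of redirect fetches spent reaching it."""
    cost = {}
    for start in start_urls:
        final, hops = resolve(start, redirects)
        cost[final] = cost.get(final, 0) + hops
    return cost


print(fetch_cost(REDIRECTS.keys(), REDIRECTS))
# {'https://example.com/threads/sample-thread.91/': 4}
```

Four extra fetches land on one page, which is the kind of crawl activity that could otherwise go to canonical URLs.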

Now, what I'm testing currently is whether the crawl demand will pick back up because the 403 pages are being blocked. Will I get back to a healthy demand and will those 301 redirects not really have an impact? Time will tell.

Alfuzzy said:

I'm reading that 301 redirects are when an old page has moved to a new/updated URL. Does this happen on our sites (and we need to write a redirect to correct things)...or are 301's mostly when we have a link on our sites...and when the link is clicked a visitor is sent to the wrong/old/bad URL? Or am I confused as far as 301's are concerned?

All the 403 and 301 URLs we're seeing are caused by the software. They're meant to be there; they improve functionality. It's not server-related at all. With the updated robots.txt file you have now, crawling of all of them should stop. You should see those two graphs begin to decline within weeks. The only thing that concerns me is PageRank flow, because we're blocking those 301s. We can discuss that in another thread, though. Or here. If you're interested in it, just ask.
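Rules for URLs like these often need wildcards, which Python's urllib.robotparser doesn't handle (it only does prefix matching). Below is a minimal matcher following the wildcard and precedence rules in RFC 9309: `*` matches any run of characters, a trailing `$` anchors the end, the longest matching rule wins, and Allow wins a tie. The rule list is a hypothetical example for the URL patterns discussed in this thread, not a recommended file; check the paths against your own install.

```python
import re

# Hypothetical rules for the XenForo URL patterns discussed above (adjust to your install).
RULES = [
    ("disallow", "/whats-new/"),
    ("disallow", "/members/"),
    ("disallow", "/attachments/"),
    ("disallow", "/goto/"),
    ("disallow", "/threads/*/latest"),
    ("disallow", "/threads/*/post-"),
]


def pattern_to_regex(path):
    """Translate a robots.txt path pattern into a regex anchored at the start."""
    anchored = path.endswith("$")
    if anchored:
        path = path[:-1]
    body = ".*".join(re.escape(part) for part in path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(url_path, rules):
    """Longest matching rule wins; Allow wins ties; no matching rule means allowed.

    url_path is the path plus any query string, e.g. "/goto/post?id=329".
    """
    best_len, allowed = -1, True
    for kind, path in rules:
        if not path:  # an empty Disallow blocks nothing
            continue
        if pattern_to_regex(path).match(url_path):
            is_allow = kind == "allow"
            if len(path) > best_len or (len(path) == best_len and is_allow):
                best_len, allowed = len(path), is_allow
    return allowed


for path in ["/threads/91/latest", "/threads/91/post-330", "/goto/post?id=329", "/threads/sample-thread.91/"]:
    print(path, "allowed" if is_allowed(path, RULES) else "blocked")
```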

I hope this helps. If you need anything clarified further, just let me know. Talk about long winded!